The Convex Boundary of Nonconvexity

Nonconvexity remains an optimization bogeyman. We solve nonconvex mixed integer problems (NP-complete!) reliably at scale for many applications. On the other hand, convex semidefinite programs––for which we know polynomial-time algorithms–-continue to terrorize PhD students.[1] The boundary between optimization problems we can solve and those we cannot is subtle.

So when is nonconvexity relatively benign? Some nonconvex problems can be transformed into easily-solved convex ones.[2] However, others can be tackled directly. In this post, I'll discuss one class of these problems: when you're dealing with the sum of many variables constrained to nonconvex sets. The Shapley–Folkman lemma tells us how closely these nonconvex problems resemble convex ones.

The Shapley–Folkman lemma

The Shapley-Folkman lemma essentially states that if you (Minkowski) sum[3] enough nonconvex sets together, the result looks closer and closer to the convex hull. I think of this lemma as a "central limit theorem" of convex geometry. The sets are might be poorly behaved on their own, but their sum is much nicer!

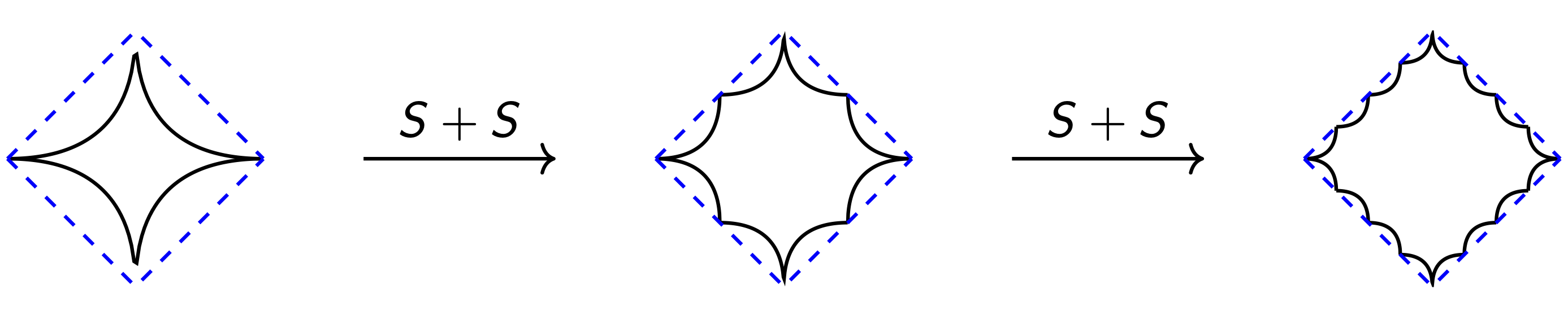

The figure below of the 1/2-norm ball, repeatedly summed with itself, gives the idea:

As the number of sets gets large, the sum becomes closer and closer to its convex hull. More precisely, take sets and let be the convex hull of their sum:

Then, for any vector , there exists vectors such that

and for at least indices ; the remaining 's lie in . In other words, given any vector , which lies in the sum of the convex hulls of the , we can find , which sum to , such that at least lie in the original sets , while the remainder lie in the convex hull of .

The lemma is surprisingly easy to prove, and I include proof at the bottom of this post.

Optimization with lots of nonconvex sets

According to this lemma, if the number of nonconvex sets in a problem is much larger than the problem dimension , the solution to a convex relaxation might be very close to a solution of the original nonconvex problem. These problems often look like this:

where is nonconvex. The specifics of a problem govern how you reconstruct a feasible solution from the relaxation. (This might be difficult.)

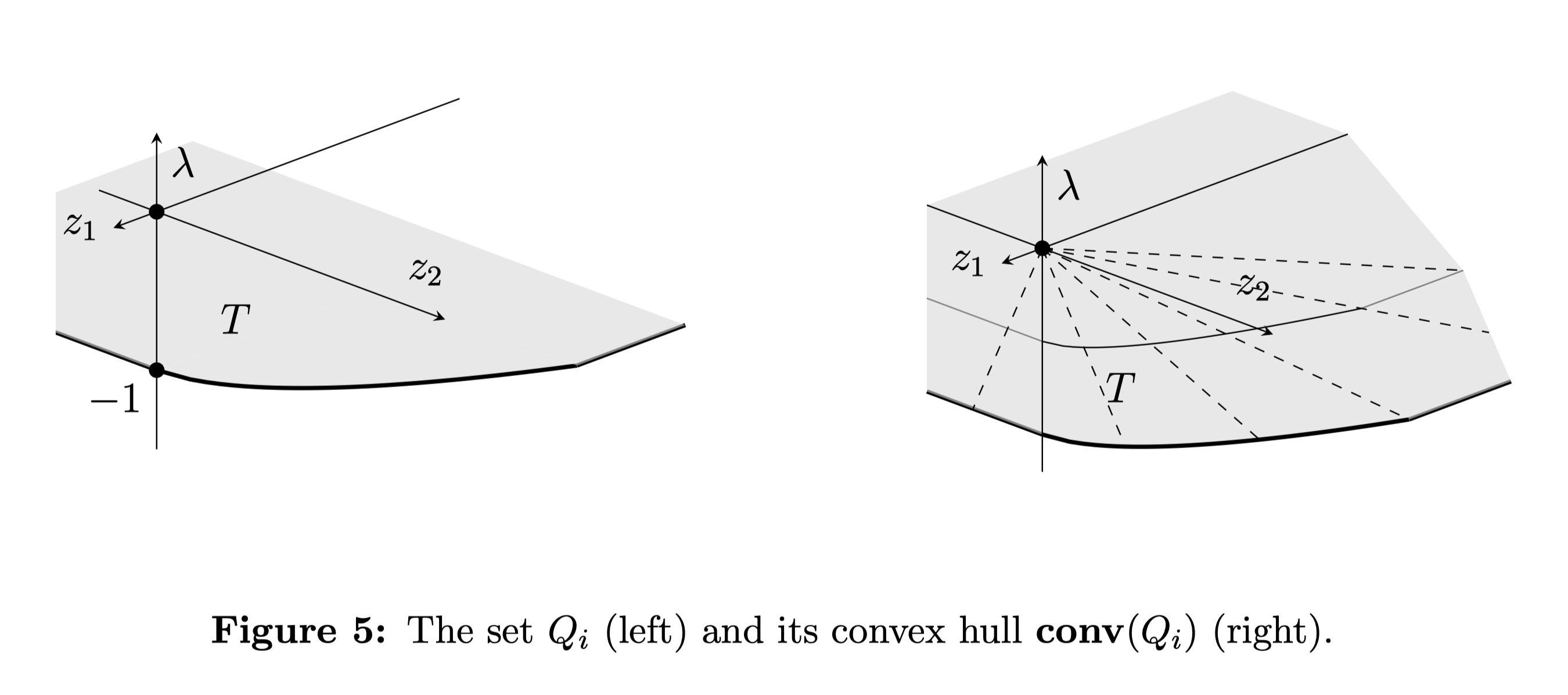

I've used this result for a network flow problem with fixed costs; to use an edge, I had to pay some positive cost. A set encoded the allowable flows over this edge. Adding an extra dimension for the cost, I get a nonconvex flow-cost set: the union of a point at zero (no flow and no cost) and the set of allowable flows at -1, shown below. (See section 5 of this paper for details.)

In many networks, the number of edges (the number of sets ) is much larger than the number of nodes (the net flow dimension ). Although fixed costs make this problem nonconvex, the relaxation often has an integral solution. And even when I had to round the solution to the relaxation, the difference in objective value between the relaxation solution (a lower bound) and the rounded solution (feasible, maybe not optimal) was typically well under 1%.

These types of problems sit right outside the boundary of convex optimization. Fortunately, the more nonconvex sets you have, the closer to this boundary you get.

Thank you to Logan Engstrom for helpful comments on a draft of this post.

Footnotes

| [1] | Don't get me started on those that take complexity theory as gospel. |

| [2] | That said, many of these transformations are not obvious. I wish there were better tools to automatically search for equivalent convex problems. |

| [3] | The Minkowski sum of sets and is . |

Proof of the lemma

This largely follows the proof in Bertsekas' Convex Optimization Theory.

Let so . Any is a sum of 's each in . Therefore,

where , , and for each . We can rewrite the above as

where . By Caratheodory's Theorem, we can write the left hand side as a sum of vectors. Since each is a convex combination of the 's, we much have some for each . There are only at most positive 's left. Thus, at most 's are not in (and are instead in ). (Equivalently, for indices)